This article originally appeared on NeuroTechX Content Lab on Medium, Sept 14, 2024.

Every time a neuron communicates with its many neighbors, the cell momentarily changes its membrane potential (the difference in electric charge between the inside and outside of the cell) ever so slightly, letting a volume of charged particles, also known as salt ions, flow into the cell. This change in salt ions on each side of the membrane represents the electrical activity of the neuron. If we view the brain as a salty meat computer, then it follows that a neuron is a semiconductor that switches into an on position for an unfathomably short period of time before returning to off, transferring information in the process. These are spikes, and they really make you think.

In popular science depictions, spiking neurons are usually firing at regular intervals. Reality, however, is rarely so tidy. While it’s unclear how much information any particular spike contains, we do know the frequency of spikes, and their timing, are fundamental to information flow in the brain.

Neuroscientists are very interested in these discrete, unitary spikes, which serve as a reductive unit of information in the brain — there are no partial spikes carrying incomplete information; it’s all-or-nothing. If we’re interested in individual spikes, and we believe their timing is critical, then how can we represent them clearly and intuitively?

Spike Raster

The raster plot is a typical means of communicating the activity of a small number of neurons over time. This graph can help to identify patterns in cell firing, such as stimulus-evoked bursts of neural activity, as well as more coordinated oscillations between neighboring layers of the brain, like hippocampal sharp wave ripples (SWRs). However, when scaling up to the volume of data acquired by modern multi-electrode arrays, with hundreds of channels recording continuously over many hours or days, the raster plot can become overwhelming, and the neural dynamics more difficult to interpret.

The raster plot is a simple and effective way of representing single neuron spikes as a function of time, so a natural option for presenting longer spike trains is to extend the static raster plot into video. One commonly used option is to portray time redundantly: it is encoded spatially on the x axis and temporally in the animation, so the viewer has to move their eyes across the screen at the speed of video playback. Alternatively, we can set a constant-width time window, shown in the left panel in the video below from 13 seconds onwards, so that the viewer’s eyes may remain focused on the same portion of the screen.
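The constant-width window can be sketched in a few lines of Python. This is only an illustration, not the code behind the video: the function name `spikes_in_window`, the one-second window, and the spike times are all made up for the example. For each video frame, only the spikes inside the trailing window are kept, expressed relative to the window's left edge so they always land in the same region of the screen.

```python
from bisect import bisect_left, bisect_right


def spikes_in_window(spike_times, t_now, window_s=1.0):
    """Return the spikes inside the window ending at t_now.

    spike_times must be sorted in ascending order (seconds).
    Returned times are relative to the left edge of the window.
    """
    t_start = t_now - window_s
    first = bisect_left(spike_times, t_start)
    last = bisect_right(spike_times, t_now)
    return [t - t_start for t in spike_times[first:last]]


# Hypothetical spike train for one neuron (seconds).
spikes = [0.10, 0.35, 0.90, 1.20, 1.25, 2.40]

# Each frame redraws only the spikes inside the trailing 1 s window.
for frame_time in (1.0, 1.5, 2.5):
    print(frame_time, spikes_in_window(spikes, frame_time))
```

Because the spike times are sorted, the two binary searches find the window boundaries without scanning the whole recording, which matters once recordings run to hours.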

Spike Sonification

Video by itself can feel lacking as a means to fully communicate neural activity, so it can be a helpful enhancement to add sound to highlight the spikes. This technique traces back to the early days of electrophysiology, in which a patch-clamped neuron would be connected to an electrode, itself hooked up to an amplifier which would transform the neuron’s tiny changes in voltage into changes in pressure audible to the human ear.

This spike sonification technique lets us perform pattern detection using our exquisitely tuned auditory system, the same system that allows us to notice subtle differences in the vocal inflections of a friend in distress, to love Chopin, and to hate Nickelback. Sound can therefore supplement neural activity visualizations, letting us hear patterns in the neuron firing data as well as see them. While this method may not fuel new discoveries by enabling researchers to identify previously undetected patterns, it can foster a new appreciation of neurophysiology as a multi-sensory experience.

My experiments have involved using MIDI generators to sonify neural spike data captured through electrophysiology and optical physiology (e.g. calcium ion imaging using fluorescence microscopy) methods. Below, I share examples from these projects, lessons about how to perform spike sonification successfully, and thoughts on design considerations.

Pitch

Modern investigators are afforded much more creative flexibility by their contemporary tools than the analogue physiologists of the past were by their simple patch clamps and guitar amps. Thanks to technical advances in multi-electrode recording probes and statistical processing techniques (such as spike sorting), today’s neuroscientists are able to distinguish different neurons and their ensembles by the characteristics of their firing. This allows an auditory property (in this example pitch, defined in layman’s terms as the highness or lowness of a tone) to convey information about the neural activity to the listener.

My award-winning project A Simian Symphony sonified spike data recorded from various pre-defined layers of the hippocampus during a specific pattern of activity, the sharp wave ripple or SWR (you can read more about the project here and here). For this activity, the anatomical depth of the active cells is a variable of interest that reveals much about the nature of the activity as a whole. By setting the pitch of each spike according to a known experimental feature, in this case the depth of the spiking cell, it was possible to hear patterns of alternating activation between brain layers.
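As a rough sketch of the depth-to-pitch idea, the snippet below maps a cell's depth linearly onto a range of MIDI note numbers. Everything specific here is an assumption for illustration rather than the mapping used in A Simian Symphony: the function name, the note range of 48 to 84, the depth values, and the choice that deeper cells sound lower.

```python
def depth_to_midi_note(depth_um, depth_min_um, depth_max_um,
                       note_low=48, note_high=84):
    """Linearly map recording depth to a MIDI note number.

    Assumed convention: the shallowest cell gets note_high and the
    deepest cell gets note_low.
    """
    if depth_max_um <= depth_min_um:
        raise ValueError("depth_max_um must be greater than depth_min_um")
    # Clamp, then normalise depth to 0..1 (0 = shallowest, 1 = deepest).
    clamped = min(max(depth_um, depth_min_um), depth_max_um)
    fraction = (clamped - depth_min_um) / (depth_max_um - depth_min_um)
    return round(note_high - fraction * (note_high - note_low))


# Hypothetical sorted spikes: (spike time in seconds, cell depth in micrometres).
spikes = [(0.012, 0.0), (0.015, 150.0), (0.021, 300.0)]

# One (time, pitch) note event per spike.
notes = [(t, depth_to_midi_note(d, 0.0, 300.0)) for t, d in spikes]
print(notes)
```

Each resulting pair is a note onset: the time says when to play it and the pitch says which layer fired, so alternating activity between layers becomes an alternation between high and low notes.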

While many listeners may expect spike sonification to produce an aesthetically pleasing melody, the above video shows that this is not always the case. The technical challenge is that only a finite range of pitches is audible to humans. Restricting this range further to a pleasant scale, like the major scale, may limit the feasibility of sonifying the spike data. Take, for instance, the MIDI generator, possibly the most famous example of a digital sonification tool. Of the 128 discrete pitches that this system can generate, only about 75 (seven in every octave of twelve) fall within any one major scale.
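The cost of restricting pitches to a scale can be checked directly. The sketch below, with function names invented for this example, lists the MIDI note numbers (0 to 127) that belong to one major scale and snaps an arbitrary note to its nearest in-scale neighbor. The tie-breaking rule, which prefers the lower note, is an arbitrary choice.

```python
MAJOR_SCALE_STEPS = (0, 2, 4, 5, 7, 9, 11)  # semitone offsets from the root


def notes_in_major_scale(root=0):
    """List every MIDI note number (0-127) in the major scale built on root."""
    return [n for n in range(128) if (n - root) % 12 in MAJOR_SCALE_STEPS]


def snap_to_scale(note, allowed):
    """Move a note to the nearest allowed note (ties go to the lower note)."""
    return min(allowed, key=lambda a: (abs(a - note), a))


c_major = notes_in_major_scale(root=0)  # MIDI note 0 is a C
print(len(c_major), "of 128 MIDI notes are in C major")

# Note 61 sits between two C major notes, 60 and 62.
print(snap_to_scale(61, c_major))
```

Snapping makes the result more melodic, but two cells whose raw pitches differ by a semitone can collapse onto the same note, which is exactly the loss of resolution described above.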

Another of my spike sonification projects, A Cubic Millimeter of Mouse Brain, a sample of which is shown below, drew plenty of feedback that the spike-sonified audio track was not appreciated by all listeners. The video presents an audiovisual representation of the firing of 55 functionally-matched neuron models (from the MICrONS cubic millimeter project) within a heavily interconnected cortical column, the structure first proposed by Vernon Mountcastle in 1955 as the functional unit of computation in the brain.

Tempo

Another parameter critical for spike sonification is the speed at which the spikes are played back. Near real-time playback helps to facilitate comparisons between brain activity and behavior, such as the movement of an animal. The MIDI-generated example below is played at real-time speed. While global patterns of neural activity can be heard, the playback is perhaps a little too fast to clearly detect the sequences of spikes.

However, real-time playback is not necessary, or even useful, for every kind of neural spiking activity, such as when the order in which neurons fire is what matters most. In A Simian Symphony, for example, the animation starts at real-time playback speed to show the data in as close to a raw format as possible. The research team behind this project, however, was most interested in the order of cell ensembles’ activations during specific ripple events. To better reveal this ordering, the sonification playback was slowed to 1/200th of real-time speed, allowing the listener to more easily appreciate the single notes in their sequence.
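The effect of the slow-down is simple arithmetic: dividing every interval by the playback speed stretches a ripple lasting 50 milliseconds into roughly 10 seconds of audio at 1/200th speed. A minimal sketch, with a hypothetical function name and made-up spike times:

```python
def to_playback_times(spike_times_s, speed=1.0):
    """Convert recording times to playback times, measured from the first spike.

    speed=1.0 is real time; speed=1/200 stretches every interval 200-fold.
    """
    if speed <= 0:
        raise ValueError("speed must be positive")
    if not spike_times_s:
        return []
    t0 = min(spike_times_s)
    return [(t - t0) / speed for t in spike_times_s]


# Hypothetical ripple: four spikes spread over 50 ms of recording.
ripple = [10.000, 10.010, 10.030, 10.050]

slowed = to_playback_times(ripple, speed=1 / 200)
print([round(t, 3) for t in slowed])
```

Spikes that were 10 milliseconds apart in the recording end up about two seconds apart in playback, far enough for a listener to hear them as separate notes in a definite order.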

Beyond spikes: field potentials as a neural symphony

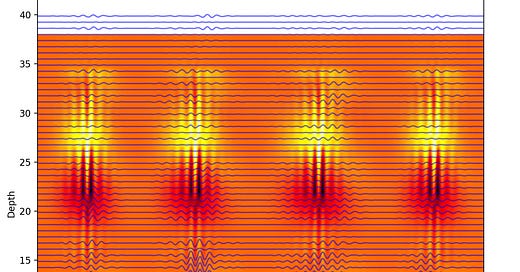

The discrete, percussive sound produced by hammering a piano key or a steel drum lends itself very well to all-or-nothing events like neuron spikes. However, many of the more interesting signals in the brain are rhythms produced by large-scale fluctuations in voltage arising from the collective behavior of many neurons. These fluctuations are continuous variables described by the local field potential (LFP) and the current source density (CSD) derived from it. Respectively, these terms describe the direction and intensity of the brain tissue’s polarization (how strongly positive or negative is it?) and the distribution of polarization across the tissue (where is it most strongly polarized, and how does that polarity drop off the further you travel from the source of the polarization?). These tissue-scale measures of changing electrical potential provide a broader view of neural activity beyond individual spikes. In this case, the brain activity signal of interest is continuously recorded from multiple electrode channels that are physically spaced throughout the brain tissue being studied. This rich, continuous, multi-channel data is best represented by a collection of sustaining instruments, meaning instruments that can hold a note for an extended period while varying qualities of the note such as volume or pitch, for example a violin or horn section.
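To make the relationship between LFP and CSD concrete, here is one common way to estimate CSD from field potentials recorded along an evenly spaced linear probe: take the second spatial difference across neighboring channels and scale it by tissue conductivity. This is a textbook-style sketch, not necessarily the method used for the data shown here. The function name, the conductivity of 0.3 S/m, and the example values are assumptions.

```python
def csd_second_difference(potentials_v, spacing_m, conductivity_s_per_m=0.3):
    """Estimate CSD at the interior channels of an evenly spaced linear probe.

    potentials_v: field potential (volts) at each channel for one time
    sample, ordered by depth. Returns one value per interior channel,
    in amperes per cubic metre.
    """
    if len(potentials_v) < 3:
        raise ValueError("need at least three channels")
    csd = []
    for i in range(1, len(potentials_v) - 1):
        # Discrete second spatial derivative of the potential.
        second_diff = (potentials_v[i - 1]
                       - 2 * potentials_v[i]
                       + potentials_v[i + 1])
        csd.append(-conductivity_s_per_m * second_diff / spacing_m ** 2)
    return csd


# Hypothetical sample: a 1 mV trough on the middle of three channels,
# with 100 micrometres between channels.
print(csd_second_difference([0.0, -1e-3, 0.0], spacing_m=100e-6))
```

With this sign convention a negative value marks a current sink, so a local trough in the field potential shows up as a sink at that depth, while a potential that changes linearly with depth produces no CSD at all.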

The audio recording included below presents a symphony generated using the data plotted above. The pitch of each violin channel encodes the spatial depth of that channel’s corresponding electrode and neuron, while the violin’s volume varies across time according to the changing voltage recorded by each electrode. Using this technique, it is possible to simultaneously see and hear the fluctuation in signal between layers of the hippocampus during the SWR.
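A minimal sketch of this kind of mapping follows, under stated assumptions: each channel holds one fixed pitch, and its volume follows the absolute voltage, scaled to the 0 to 127 range that MIDI uses for volume-like values. The function name, channel labels, pitches, and voltages are all hypothetical, and the actual project may have mapped voltage to volume differently.

```python
def voltage_to_volume(samples_uv, max_abs_uv):
    """Scale absolute voltage to a 0-127 volume value, one per sample."""
    if max_abs_uv <= 0:
        raise ValueError("max_abs_uv must be positive")
    return [min(127, round(127 * abs(v) / max_abs_uv)) for v in samples_uv]


# Hypothetical LFP traces in microvolts, one list per electrode channel.
lfp_uv = {
    "shallow": [0.0, 50.0, -100.0, 25.0],
    "deep": [0.0, -200.0, 100.0, 0.0],
}

# Hypothetical fixed pitch per channel (one sustained "violin" each).
channel_pitch = {"shallow": 76, "deep": 52}

# Normalise against the largest deflection on any channel so that
# relative loudness between channels is preserved.
peak = max(abs(v) for trace in lfp_uv.values() for v in trace)
for name, trace in lfp_uv.items():
    print(name, channel_pitch[name], voltage_to_volume(trace, peak))
```

Normalizing every channel against the same peak keeps quiet layers quiet. Normalizing each channel separately would make every violin equally loud at its own maximum and hide the differences between layers.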

In the final version of A Simian Symphony, the unit spike sonification (piano notes) and visualization (flashing points) were overlaid onto the LFP and CSD audiovisual data (blue-red background and violin symphony). Since the SWRs occur very rapidly (within 50 milliseconds), the playback speed was slowed down significantly to allow the viewer to appreciate the dynamics of some of the most synchronous oscillation patterns in the brain.

Conclusion

The experiments described here have certainly not turned every knob and dial available when sonifying neural activity. However, they reveal that adding an audio layer to graphics can help to engage the viewer more deeply, turning the data visualization into a multi-sensory, immersive, and intuitive experience.

Written by Tyler Sloan, edited by Shubhom Bhattacharya and Benjamin Schornstein, with headline image by Lina Cortéz.

Tyler Sloan is a creative technologist whose job description is somewhere between a data scientist and 3D artist, depending on the day. He spent his PhD studying neuro-development at McGill, then founded Quorumetrix, a scientific data analysis and visualization studio in Montreal. He bridges both the academic and artistic worlds by transforming laboratory data into engaging graphics, without compromising on accuracy.

Shubhom Bhattacharya is an engineer who works on a clinical trial for vision restoration for a startup in New York City.

Other media

See the full-length versions of ‘A Simian Symphony’ and ‘A Cubic Millimeter of Mouse Brain’ on the Quorumetrix Studio YouTube channel.

Research article: Circuit dynamics of superficial and deep CA1 pyramidal cells and inhibitory cells in freely moving macaques (Abbaspoor and Hoffman 2024 Cell Reports)